Create Model Providers

Creating a Model Type Plugin

The first step in creating a Model type plugin is to initialize the plugin project and create the model provider file. After that, you can integrate specific predefined or custom models.

Prerequisites

Dify plugin scaffolding tool

Python environment, version ≥ 3.12

For detailed instructions on preparing the plugin development scaffolding tool, please refer to Initializing Development Tools.

Create New Project

In the current directory, run the CLI tool to create a new Dify plugin project:

./dify-plugin-darwin-arm64 plugin initIf you have renamed the binary file to dify and copied it to the /usr/local/bin path, you can run the following command to create a new plugin project:

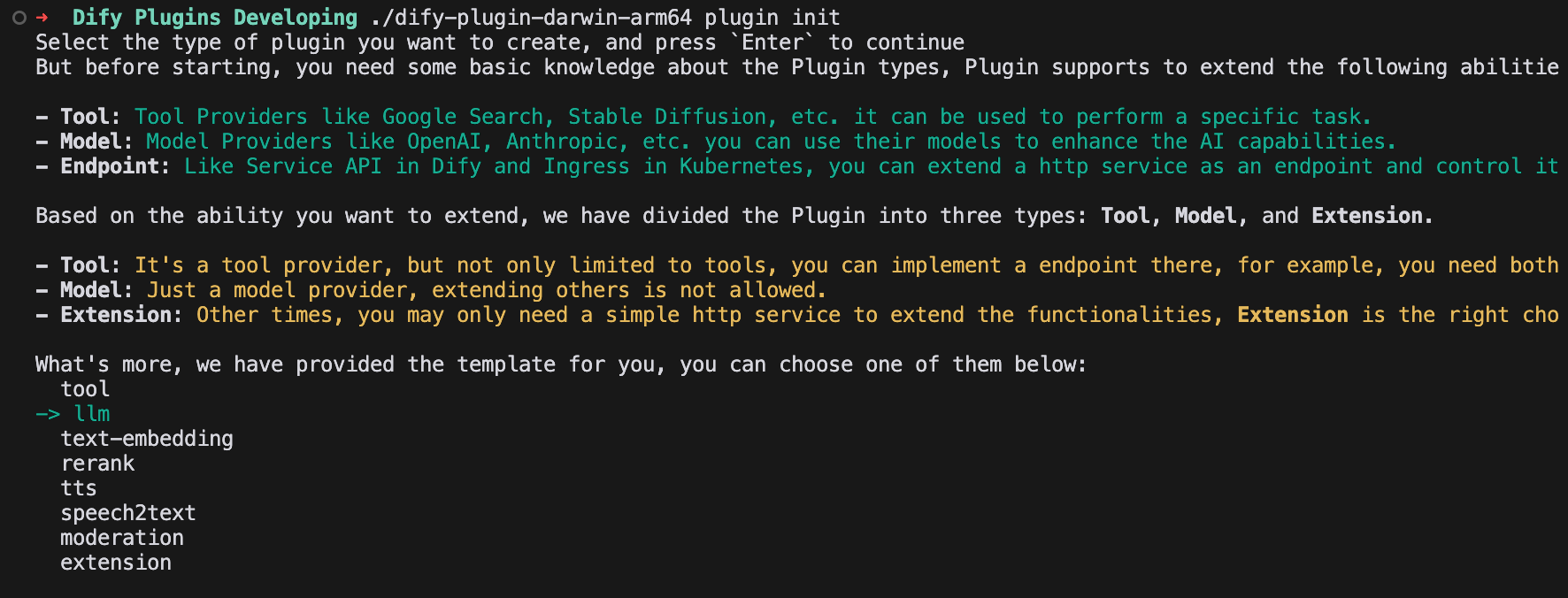

dify plugin initChoose Model Plugin Template

Plugins are divided into three types: tools, models, and extensions. All templates in the scaffolding tool provide complete code projects. This example will use an LLM type plugin.

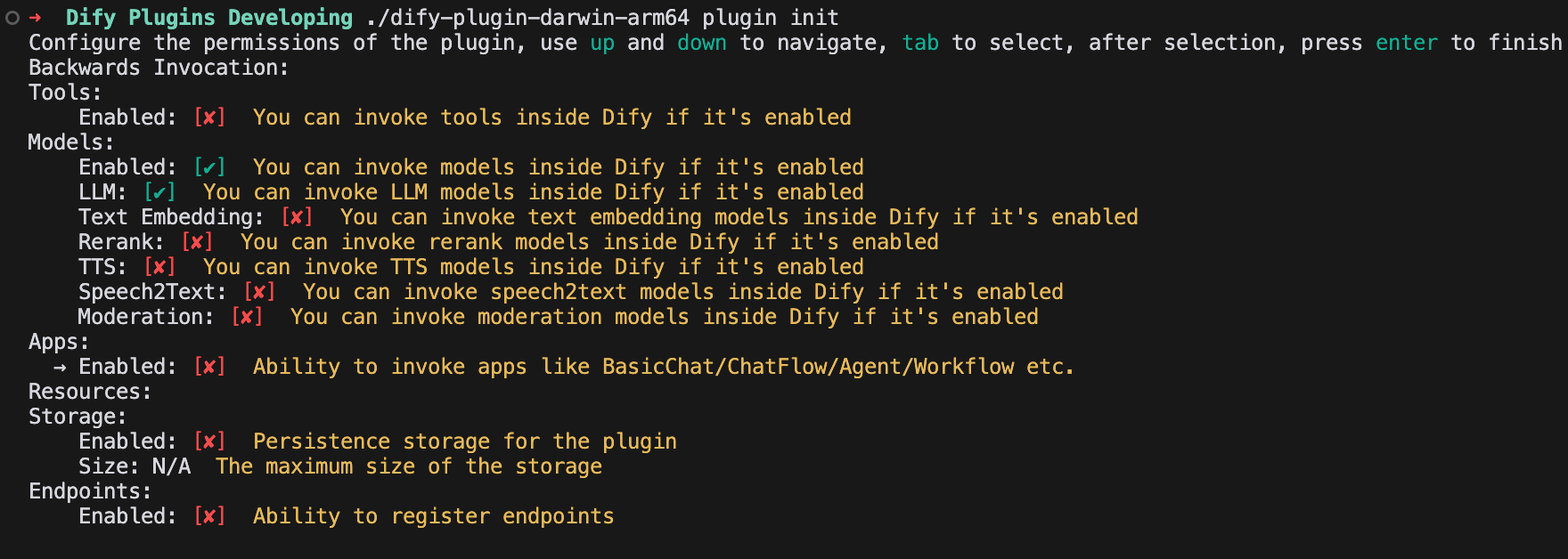

Configure Plugin Permissions

Configure the following permissions for this LLM plugin:

Models

LLM

Storage

Model Type Configuration

Model providers support three configuration methods: predefined-model, customizable-model, and fetch-from-remote.

predefined-model: The provider offers a set of common models that become usable once a single set of unified provider credentials is configured. For example, the OpenAI provider offers predefined models such as gpt-3.5-turbo-0125 and gpt-4o-2024-05-13. For detailed development instructions, refer to Integrating Predefined Models.

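customizable-model: You must manually add credentials for each model. For example, Xinference supports both LLM and Text Embedding models, but each model has a unique model_uid, so integrating both requires configuring a model_uid for each model. For detailed development instructions, refer to Integrating Custom Models.

fetch-from-remote: Like predefined-model, only unified provider credentials are required, but the list of available models is fetched from the provider using those credentials.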

These configuration methods can coexist, meaning a provider can support predefined-model + customizable-model or predefined-model + fetch-from-remote combinations.
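To make the difference between the methods concrete, here is a minimal sketch that maps a provider's declared methods to the credential sections its manifest would need. It assumes that predefined-model and fetch-from-remote both rely on provider-level credentials (a provider_credential_schema section) while customizable-model needs per-model credentials (the model_credential_schema section described later). The ConfigurateMethod enum and required_schemas helper are hypothetical illustrations, not SDK APIs.

```python
from enum import Enum


class ConfigurateMethod(str, Enum):
    """Hypothetical enum of the configuration method names used in the manifest."""
    PREDEFINED_MODEL = "predefined-model"
    CUSTOMIZABLE_MODEL = "customizable-model"
    FETCH_FROM_REMOTE = "fetch-from-remote"


def required_schemas(methods: list[str]) -> set[str]:
    """Return the credential schema sections needed for the declared methods.

    Assumption: predefined-model and fetch-from-remote use one set of
    provider-level credentials; customizable-model needs per-model credentials.
    """
    # Enum lookup by value raises ValueError for unknown method names.
    declared = {ConfigurateMethod(m) for m in methods}
    schemas: set[str] = set()
    provider_level = {ConfigurateMethod.PREDEFINED_MODEL, ConfigurateMethod.FETCH_FROM_REMOTE}
    if declared & provider_level:
        schemas.add("provider_credential_schema")
    if ConfigurateMethod.CUSTOMIZABLE_MODEL in declared:
        schemas.add("model_credential_schema")
    return schemas


# A provider offering predefined models plus user-configured custom models:
print(sorted(required_schemas(["predefined-model", "customizable-model"])))
# ['model_credential_schema', 'provider_credential_schema']
```

Because the methods can coexist, the helper takes a list and unions the requirements instead of assuming a single method per provider.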

Adding a New Model Provider

Here are the main steps to add a new model provider:

Create Model Provider Configuration YAML File

Add a YAML file in the provider directory that describes the provider's basic information and parameter configuration. Its content must follow the ProviderSchema specification.

Write Model Provider Code

Implement a Python provider class that conforms to the system's interface requirements, connects to the provider's API, and provides the core functionality.

Here are the full details of how to do each step.

1. Create Model Provider Configuration File

The manifest is a YAML file that declares the model provider's basic information, supported model types, configuration methods, and credential rules. The plugin project template automatically generates configuration files under the /providers path.
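As a rough illustration of the kinds of fields a manifest declares, the sketch below expresses a provider manifest as a Python dict and checks it for a few top-level keys. The key names (provider, label, supported_model_types, configurate_methods, provider_credential_schema, credential_form_schemas) are assumed from Dify's provider schema, and treating the first four as required is an assumption of this sketch. check_manifest is a hypothetical helper, not part of the SDK; the real file is YAML, not Python.

```python
# Hypothetical sketch: main manifest fields written as a Python dict instead of YAML.
# Key names are assumed from Dify's provider schema; check_manifest is illustrative.
REQUIRED_KEYS = ("provider", "label", "supported_model_types", "configurate_methods")

anthropic_manifest = {
    "provider": "anthropic",                      # unique provider identifier
    "label": {"en_US": "Anthropic"},              # display name shown in the UI
    "supported_model_types": ["llm"],             # model types this provider offers
    "configurate_methods": ["predefined-model"],  # how models are configured
    "provider_credential_schema": {               # form for unified provider credentials
        "credential_form_schemas": [
            {"variable": "anthropic_api_key", "type": "secret-input", "required": True},
        ]
    },
}


def check_manifest(manifest: dict) -> list[str]:
    """Return human-readable problems found in the manifest (empty if none)."""
    problems = [f"missing key: {key}" for key in REQUIRED_KEYS if key not in manifest]
    schema = manifest.get("provider_credential_schema", {})
    for form in schema.get("credential_form_schemas", []):
        # Every credential field needs a variable name and an input type.
        for field in ("variable", "type"):
            if field not in form:
                problems.append(f"credential form entry missing {field!r}")
    return problems


print(check_manifest(anthropic_manifest))  # []
print(check_manifest({"provider": "demo"}))
# ['missing key: label', 'missing key: supported_model_types', 'missing key: configurate_methods']
```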

Here's an example of the anthropic.yaml configuration file for Anthropic:

If the provider also offers custom models (for example, OpenAI's fine-tuned models), you need to add the model_credential_schema field.
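The following minimal sketch shows what a model_credential_schema section might contain and how per-model credentials could be checked against it. The field names (model, credential_form_schemas, variable, type, required) and the OpenAI variable names are assumptions based on Dify's provider schema, and missing_credentials is a hypothetical helper used only for illustration.

```python
# Hypothetical sketch: a model_credential_schema section as a dict, plus a helper
# that reports required credential fields a user has not filled in.
model_credential_schema = {
    "model": {
        "label": {"en_US": "Model Name"},
        "placeholder": {"en_US": "Enter your model name"},
    },
    "credential_form_schemas": [
        {"variable": "openai_api_key", "type": "secret-input", "required": True},
        {"variable": "openai_organization", "type": "text-input", "required": False},
    ],
}


def missing_credentials(schema: dict, credentials: dict) -> list[str]:
    """Return required variables that are absent or empty in `credentials`."""
    return [
        form["variable"]
        for form in schema["credential_form_schemas"]
        if form.get("required", False) and not credentials.get(form["variable"])
    ]


# Each custom (e.g. fine-tuned) model is configured with its own credentials.
print(missing_credentials(model_credential_schema, {"openai_organization": "org-123"}))
# ['openai_api_key']
```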

The following is sample code for the OpenAI family of models:

For the complete Model Provider YAML specification, see Schema.

2. Write Model Provider Code

Create a Python file with the same name, e.g. anthropic.py, in the /providers folder and implement a class that inherits from the __base.model_provider.ModelProvider base class, e.g. AnthropicProvider. The following is the Anthropic sample code:

The provider class must inherit from the __base.model_provider.ModelProvider base class and implement validate_provider_credentials, the method that validates the provider's unified credentials; see AnthropicProvider.
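The shape of such a provider class can be sketched as follows. In a real plugin the base class and the credential error type come from the Dify plugin SDK; here ModelProvider, CredentialsValidateFailedError, and AnthropicLLMStub are local, hypothetical stand-ins so the sketch runs on its own. The pattern shown, delegating provider validation to a model-level credential check against one representative model, is an assumption about a typical implementation, not the exact SDK code.

```python
import logging
from abc import ABC, abstractmethod

logger = logging.getLogger(__name__)


class CredentialsValidateFailedError(Exception):
    """Stand-in for the SDK's credential validation error (hypothetical)."""


class ModelProvider(ABC):
    """Simplified stand-in for the SDK's ModelProvider base class (hypothetical)."""

    @abstractmethod
    def validate_provider_credentials(self, credentials: dict) -> None:
        """Raise CredentialsValidateFailedError if the credentials are invalid."""


class AnthropicLLMStub:
    """Hypothetical model runtime; a real plugin would call the provider's API here."""

    def validate_credentials(self, model: str, credentials: dict) -> None:
        if not credentials.get("anthropic_api_key"):
            raise CredentialsValidateFailedError("anthropic_api_key is required")


class AnthropicProvider(ModelProvider):
    def validate_provider_credentials(self, credentials: dict) -> None:
        try:
            # Reuse the model-level check against one representative model.
            AnthropicLLMStub().validate_credentials(
                model="claude-3-opus-20240229", credentials=credentials
            )
        except CredentialsValidateFailedError:
            raise  # already the expected error type; propagate unchanged
        except Exception:
            logger.exception("anthropic credentials validate failed")
            raise
```

Calling AnthropicProvider().validate_provider_credentials({}) raises CredentialsValidateFailedError, while a dict containing a non-empty anthropic_api_key passes. Re-raising the expected error unchanged and logging anything unexpected keeps failures distinguishable for the caller.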

Alternatively, you can leave validate_provider_credentials as a stub at first and, once the model's credential validation method is implemented, reuse that method directly. For other types of model providers, refer to the configuration methods below.

Custom Model Providers

For custom model providers like Xinference, you can skip the full implementation step. Simply create an empty class called XinferenceProvider and implement an empty validate_provider_credentials method in it.

Detailed Explanation:

• XinferenceProvider is a placeholder class used to identify custom model providers.

• Although the validate_provider_credentials method is never actually called, it must exist: the parent class is abstract and requires every subclass to implement this method. Providing an empty implementation avoids the instantiation error that would otherwise occur because the abstract method is not implemented.
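The instantiation error described above can be reproduced with Python's standard abc module. In this sketch ModelProvider is a simplified, hypothetical stand-in for the SDK base class, and IncompleteProvider is a hypothetical class that omits the method. It shows that a subclass missing the abstract method cannot be instantiated, while an empty implementation is enough.

```python
from abc import ABC, abstractmethod


class ModelProvider(ABC):
    """Simplified stand-in for the SDK's abstract ModelProvider base class."""

    @abstractmethod
    def validate_provider_credentials(self, credentials: dict) -> None: ...


class IncompleteProvider(ModelProvider):
    """Omits the abstract method, so Python refuses to instantiate it."""


class XinferenceProvider(ModelProvider):
    """Placeholder for a customizable-model provider; credentials are validated per model."""

    def validate_provider_credentials(self, credentials: dict) -> None:
        pass  # intentionally empty: never called for this provider


try:
    IncompleteProvider()
except TypeError as err:
    print(f"TypeError: {err}")

provider = XinferenceProvider()  # instantiates without error
```

The exact TypeError message varies between Python versions, but the failure itself is what the empty method prevents.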

After initializing the model provider, the next step is to integrate the specific LLMs offered by the provider. For detailed instructions, please refer to:
