Error Type

This article summarizes the potential exceptions and corresponding error types that may occur in different types of nodes.

General Error

System error

Typically caused by system issues such as a disabled sandbox service or network connection problems.

Operational Error

Occurs when developers are unable to configure or run the node correctly.

Code Node

Code nodes support running Python and JavaScript code for data transformation in workflows or chat flows. Here are 4 common runtime errors:

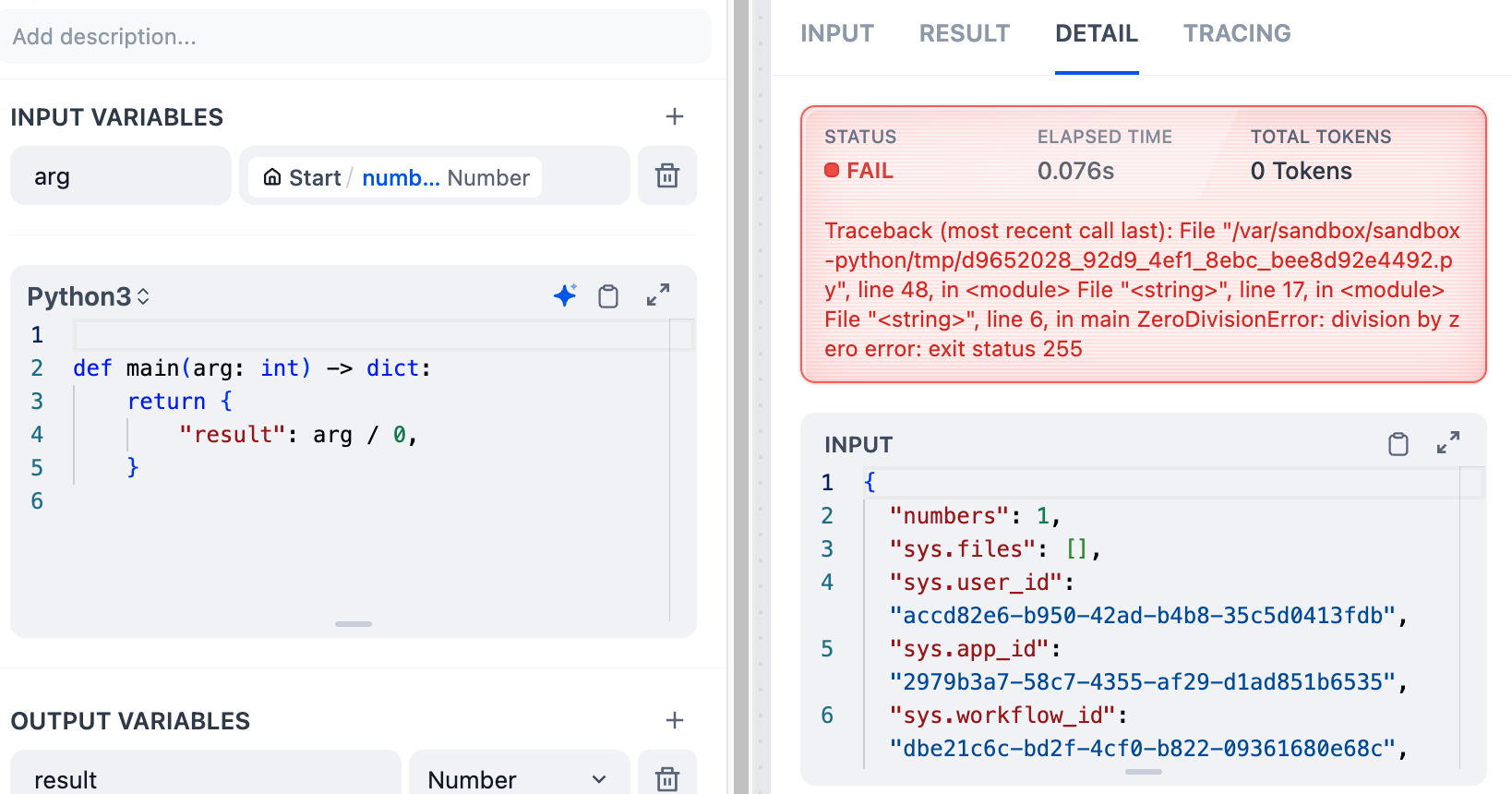

Code Node Error (CodeNodeError)

This error occurs due to exceptions in developer-written code, such as: missing variables, calculation logic errors, or treating string array inputs as string variables. You can locate the issue using the error message and exact line number.
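As an illustration of the "string array treated as a string" case, the sketch below normalizes the input before using it. The `main` function name and the `tags` parameter are hypothetical, chosen only for this example.

```python
def main(tags) -> dict:
    # Hypothetical input: `tags` may arrive as a list of strings or as one string.
    if isinstance(tags, str):
        tags = [tags]
    # Calling tags.upper() directly on a list would raise AttributeError,
    # so each element is upper-cased individually instead.
    return {"result": ", ".join(tag.upper() for tag in tags)}
```

Guarding on the input type this way keeps the code valid whether the upstream variable is a single string or an array of strings.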

Sandbox Network Issues (System Error)

This error commonly occurs when there are network traffic or connection issues, such as when the sandbox service isn't running or proxy services have interrupted the network. You can resolve this through the following steps:

Check network service quality

Start the sandbox service

Verify proxy settings

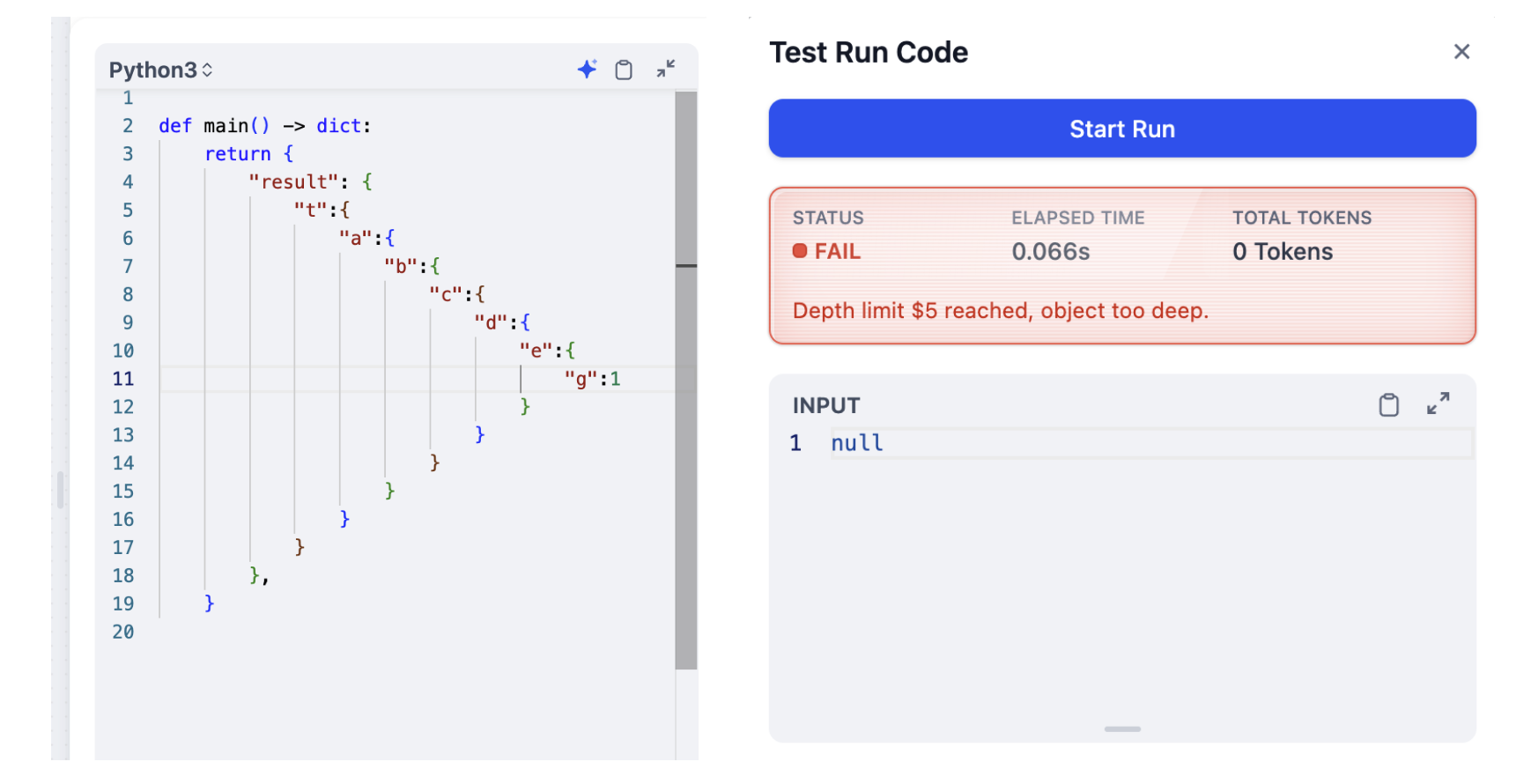

Depth Limit Error (DepthLimitError)

By default, the node supports nested structures up to 5 levels deep. Data nested more deeply than that triggers this error.

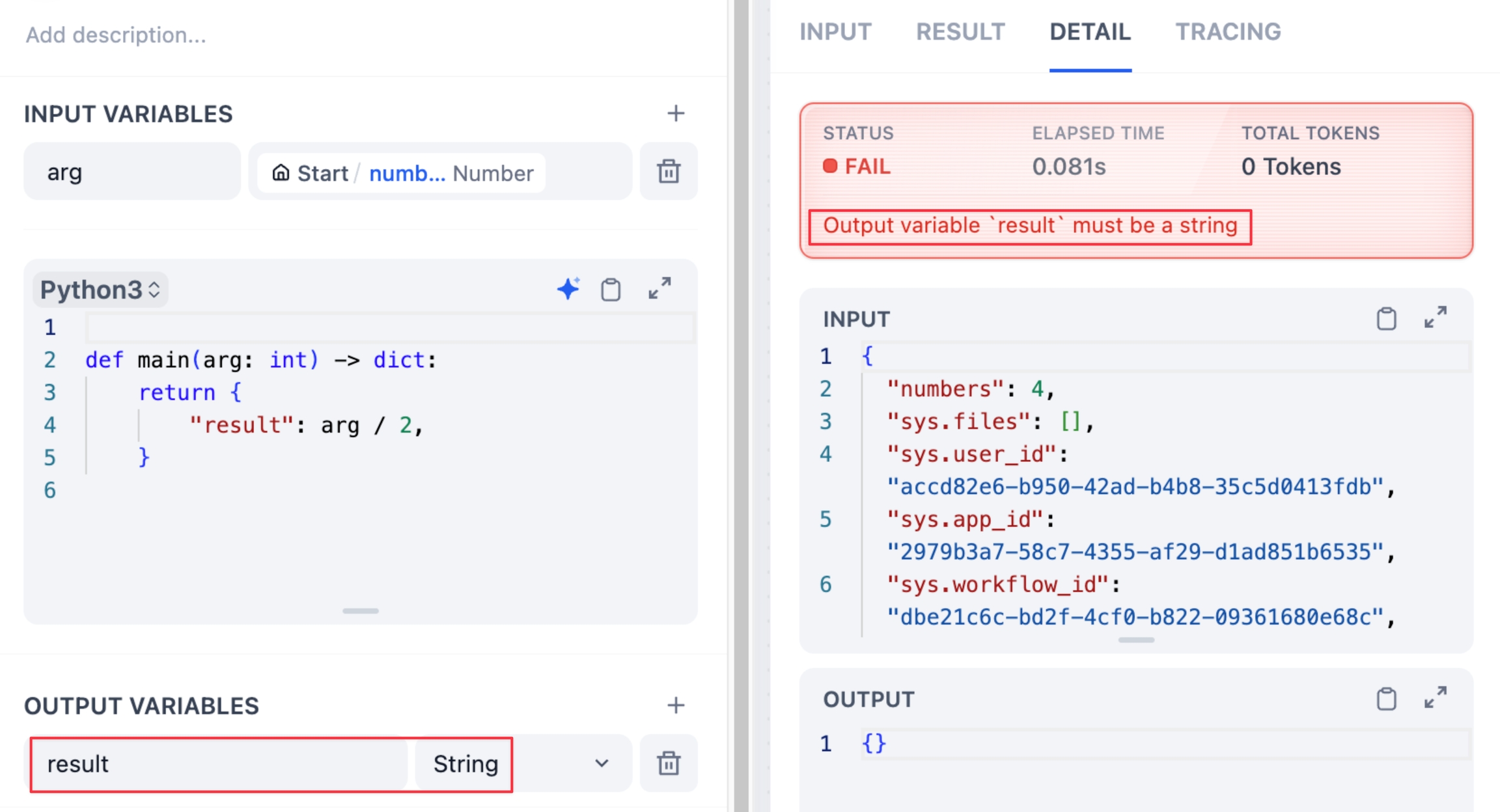
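To check a value before returning it, nesting depth can be measured with a short recursive helper. This is a minimal sketch: the limit of 5 comes from the text above, but the counting convention (scalars count as 0, each dict or list layer adds 1) is an assumption and may differ from how the node counts levels.

```python
MAX_DEPTH = 5  # limit stated above; the counting convention is an assumption


def nesting_depth(value) -> int:
    """Return 0 for scalars; each enclosing dict or list adds one level."""
    if isinstance(value, dict):
        return 1 + max((nesting_depth(v) for v in value.values()), default=0)
    if isinstance(value, list):
        return 1 + max((nesting_depth(v) for v in value), default=0)
    return 0


def check_depth(value, limit: int = MAX_DEPTH) -> None:
    """Raise ValueError when the value is nested more deeply than the limit."""
    depth = nesting_depth(value)
    if depth > limit:
        raise ValueError(f"nesting depth {depth} exceeds limit {limit}")
```

If the check fails, flattening the structure or serializing the deeper levels to a string are two possible ways to stay under the limit.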

Output Validation Error (OutputValidationError)

This error occurs when the actual type of an output variable doesn't match the output variable type selected in the node. To resolve it, change the selected output variable type so that it matches what the code returns.
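The mismatch can be pictured as a type check between a declared type and the value the code actually returns. The sketch below is illustrative only: the type names in `EXPECTED_PY_TYPES` and the helper `matches_declared_type` are assumptions, not the node's real validation logic.

```python
# Hypothetical mapping from declared output types to Python types.
EXPECTED_PY_TYPES = {
    "string": str,
    "number": (int, float),
    "object": dict,
    "array[string]": list,
}


def matches_declared_type(value, declared: str) -> bool:
    """Return True when `value` is compatible with the declared type name."""
    expected = EXPECTED_PY_TYPES[declared]
    # bool is a subclass of int in Python, so reject it explicitly for numbers.
    if declared == "number" and isinstance(value, bool):
        return False
    if not isinstance(value, expected):
        return False
    if declared == "array[string]":
        return all(isinstance(item, str) for item in value)
    return True
```

For example, returning the string `"3"` for an output declared as a number would fail this check, while returning the integer `3` would pass.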

LLM Node

The LLM node is a core component of Chatflow and Workflow. It uses LLMs' capabilities in dialogue, generation, classification, and processing to complete various tasks based on user input instructions.

Here are 6 common runtime errors:

Variable Not Found Error (VariableNotFoundError)

This error occurs when the LLM cannot find system prompts or variables set in the context. Application developers can resolve this by replacing the problematic variables.

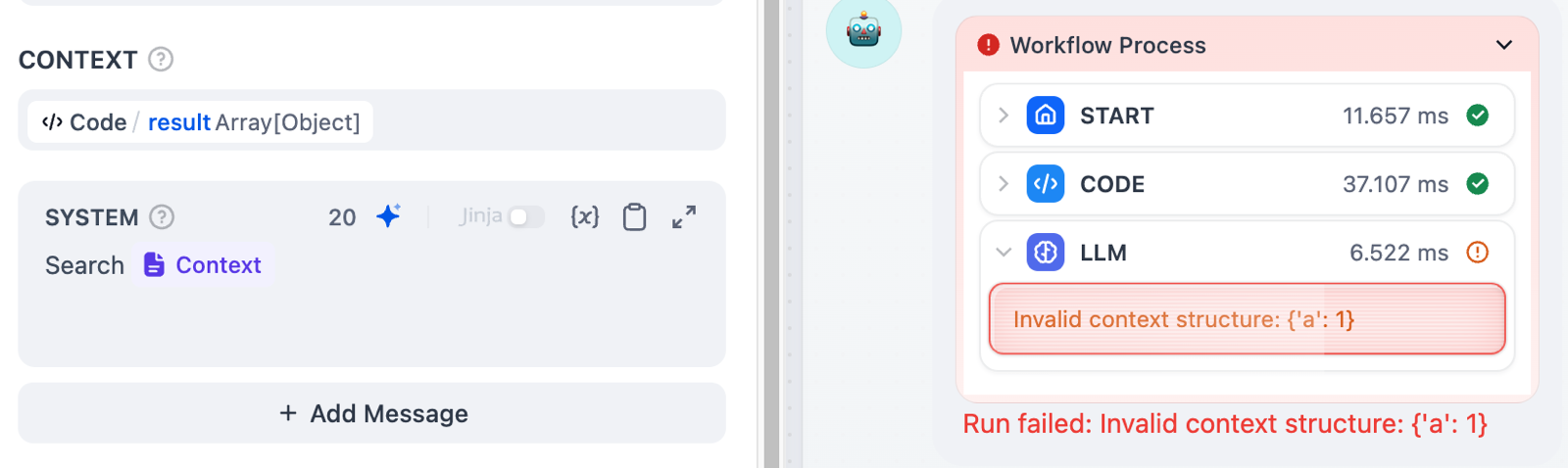

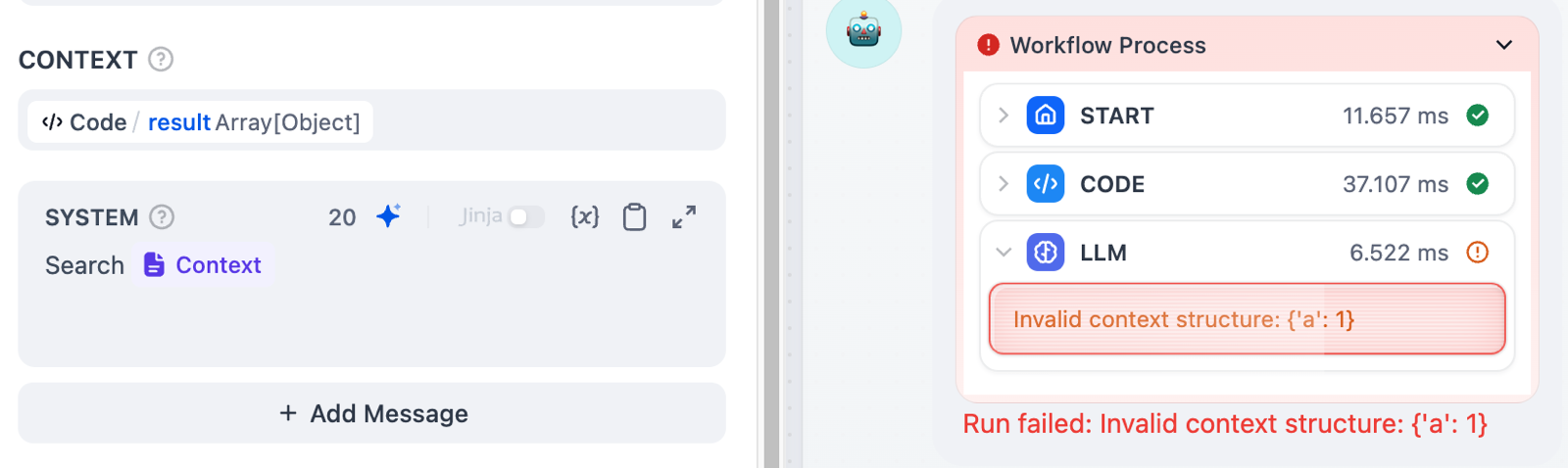

Invalid Context Structure Error (InvalidContextStructureError)

An error occurs when the context within the LLM node receives an invalid data structure (such as array[object]). Context only supports string (String) data structures.
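One way to avoid passing an array of objects into the context is to convert it to a single string first, for example in a Code node placed before the LLM node. The helper below is a sketch under that assumption; `to_context_string` is a hypothetical name, and one JSON document per line is just one reasonable text layout.

```python
import json


def to_context_string(records) -> str:
    """Serialize a list of objects into one newline-separated string."""
    if isinstance(records, str):
        return records  # already a string, nothing to convert
    # sort_keys gives a stable layout; ensure_ascii=False keeps non-ASCII text readable.
    return "\n".join(
        json.dumps(record, ensure_ascii=False, sort_keys=True) for record in records
    )
```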

Invalid Variable Type Error (InvalidVariableTypeError)

This error appears when the system prompt type is not in the standard Prompt text format or Jinja syntax format.

Model Not Exist Error (ModelNotExistError)

Each LLM node requires a configured model. This error occurs when no model is selected.

LLM Authorization Required Error (LLMModeRequiredError)

This error occurs when the model selected in the LLM node has no API Key configured. Refer to the model authorization documentation to set one up.

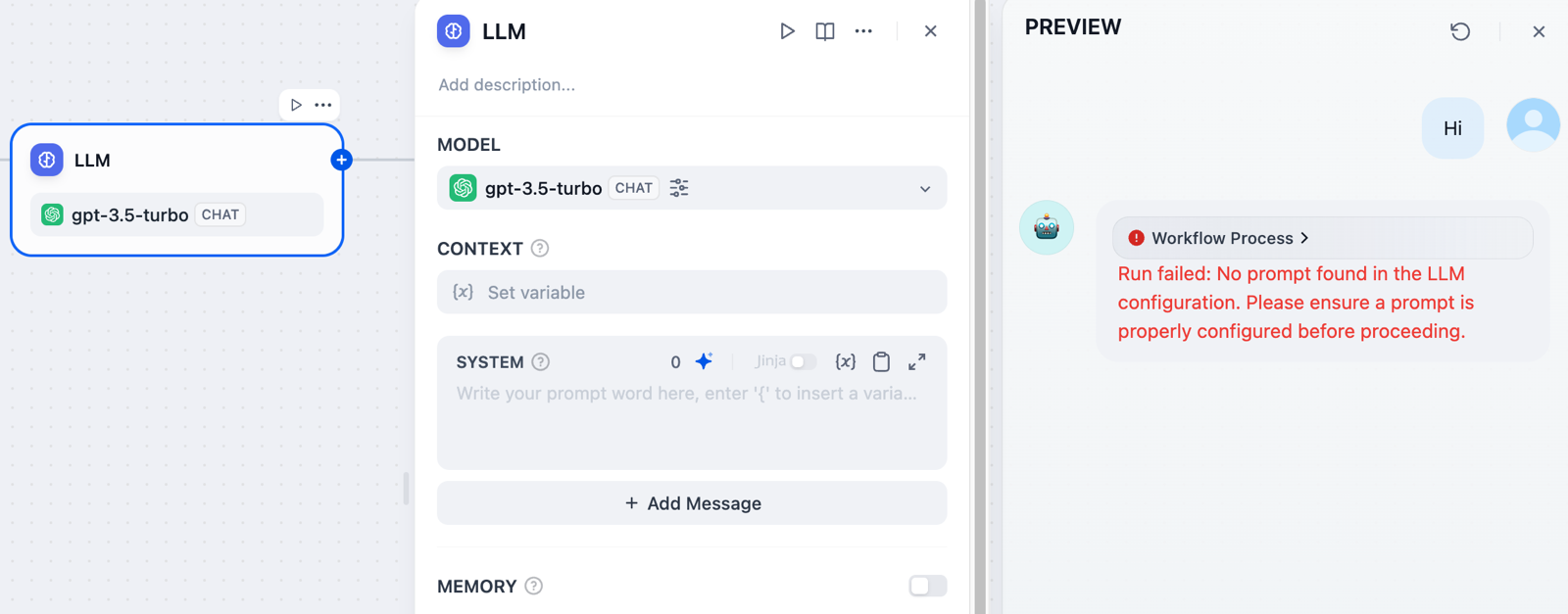

No Prompt Found Error (NoPromptFoundError)

An error occurs when the LLM node's prompt is empty, as prompts cannot be blank.

HTTP

HTTP nodes allow seamless integration with external services through customizable requests for data retrieval, webhook triggering, image generation, or file downloads via HTTP requests. Here are 5 common errors for this node:

Authorization Configuration Error (AuthorizationConfigError)

This error occurs when authentication information (Auth) is not configured.

File Fetch Error (FileFetchError)

This error appears when file variables cannot be retrieved.

Invalid HTTP Method Error (InvalidHttpMethodError)

An error occurs when the request method is not one of the following: GET, HEAD, POST, PUT, PATCH, or DELETE.

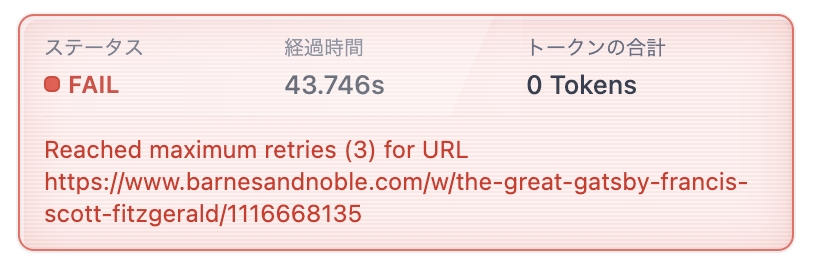
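When the method comes from a variable, it can be validated against the supported list before the request is built. This is a minimal sketch: `normalize_method` is a hypothetical helper, and upper-casing the input is a convenience of this example rather than documented node behavior.

```python
# Methods listed above as supported by the HTTP node.
ALLOWED_METHODS = {"GET", "HEAD", "POST", "PUT", "PATCH", "DELETE"}


def normalize_method(method: str) -> str:
    """Return the upper-cased method, or raise ValueError if unsupported."""
    normalized = method.strip().upper()
    if normalized not in ALLOWED_METHODS:
        raise ValueError(f"unsupported HTTP method: {method!r}")
    return normalized
```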

Response Size Error (ResponseSizeError)

HTTP response size is limited to 10MB. An error occurs if the response exceeds this limit.
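The limit amounts to a simple size comparison on the response body. The sketch below assumes that 10MB means 10 × 1024 × 1024 bytes; the exact byte count the node uses is not stated here, and `exceeds_limit` is a hypothetical helper.

```python
MAX_RESPONSE_BYTES = 10 * 1024 * 1024  # assumption: 10MB interpreted as 10 MiB


def exceeds_limit(body: bytes, limit: int = MAX_RESPONSE_BYTES) -> bool:
    """Return True when the response body is larger than the limit."""
    return len(body) > limit
```

If an endpoint regularly returns more than this, requesting a paginated or filtered response is usually the simplest way to stay under the limit.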

HTTP Response Code Error (HTTPResponseCodeError)

An error occurs when the response returns a status code that doesn't start with 2 (codes such as 200 and 201 start with 2 and indicate success). If exception handling is enabled, errors will occur for status codes such as 400, 404, and 500; otherwise, these won't trigger errors.
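The rule described above can be sketched as follows. This is one reading of the paragraph, not the node's actual implementation; `check_response` and its `error_handling_enabled` parameter are hypothetical names.

```python
def is_success(status_code: int) -> bool:
    """A status code counts as successful when it is in the 2xx range."""
    return 200 <= status_code < 300


def check_response(status_code: int, error_handling_enabled: bool) -> None:
    """Raise only when exception handling is enabled and the code is not 2xx."""
    if error_handling_enabled and not is_success(status_code):
        raise RuntimeError(f"request failed with status code {status_code}")
```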

Tool

The following 3 errors commonly occur during runtime:

Tool Execution Error (ToolNodeError)

This error occurs during tool execution itself, such as when the target API's request limit is reached.

Tool Parameter Error (ToolParameterError)

An error occurs when the configured tool node parameters are invalid, such as passing parameters that don't match the tool node's defined parameters.
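A mismatch between passed and defined parameters can be detected by comparing the two sets of names. The sketch below assumes a hypothetical schema format in which each defined parameter maps to a dict with an optional `required` flag; real tool definitions may look different.

```python
def find_parameter_problems(defined: dict, passed: dict) -> list:
    """Return human-readable problems; an empty list means the call looks valid."""
    problems = []
    # Parameters that were passed but are not part of the tool's definition.
    for name in passed:
        if name not in defined:
            problems.append(f"unexpected parameter: {name}")
    # Required parameters that were not passed at all.
    for name, spec in defined.items():
        if spec.get("required") and name not in passed:
            problems.append(f"missing required parameter: {name}")
    return problems
```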

Tool File Processing Error (ToolFileError)

An error occurs when the tool node cannot find the required files.
