Private Deployment of Ollama + DeepSeek + Dify: Build Your Own AI Assistant

Overview

DeepSeek is an open-source large language model (LLM) with strong reflective reasoning capabilities for AI-powered conversations. By deploying it privately, you gain full control over data security and system configuration while keeping your deployment strategy flexible.

Dify, an open-source AI application development platform, offers a complete private deployment solution. By seamlessly integrating a locally deployed DeepSeek model into the Dify platform, enterprises can build powerful AI applications within their own infrastructure while ensuring data privacy.

Advantages of Private Deployment:

Superior Performance: Delivers a conversational experience comparable to commercial models.

Isolated Environment: Runs entirely offline, so prompts and responses stay inside your own infrastructure.

Full Data Control: Retains complete ownership of data assets, ensuring compliance.

Prerequisites

Hardware Requirements:

CPU: ≥ 2 Cores

RAM/GPU Memory: ≥ 16 GiB (Recommended)

Software Requirements:

Docker Compose

Deployment Steps

1. Install Ollama

Ollama is a cross-platform LLM management client (macOS, Windows, Linux) that simplifies deploying large language models such as DeepSeek, Llama, and Mistral. It provides one-click model deployment and keeps all data stored locally for security and privacy.

Visit Ollama's official website and follow the installation instructions for your platform. After installation, verify it by running ollama -v in a terminal, which prints the installed version.
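The same check can be scripted, for example as part of a provisioning step. The sketch below assumes the ollama binary is on your PATH and that the -v flag prints a version line; the helper name ollama_version is illustrative only.

```python
import shutil
import subprocess
from typing import Optional


def ollama_version(binary: str = "ollama") -> Optional[str]:
    """Return the text printed by `<binary> -v`, or None if the binary is not on PATH."""
    path = shutil.which(binary)
    if path is None:
        return None
    result = subprocess.run([path, "-v"], capture_output=True, text=True, timeout=10)
    # Some builds may print to stderr, so fall back to it when stdout is empty.
    return (result.stdout or result.stderr).strip()


if __name__ == "__main__":
    version = ollama_version()
    print(version if version else "ollama not found on PATH")
```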

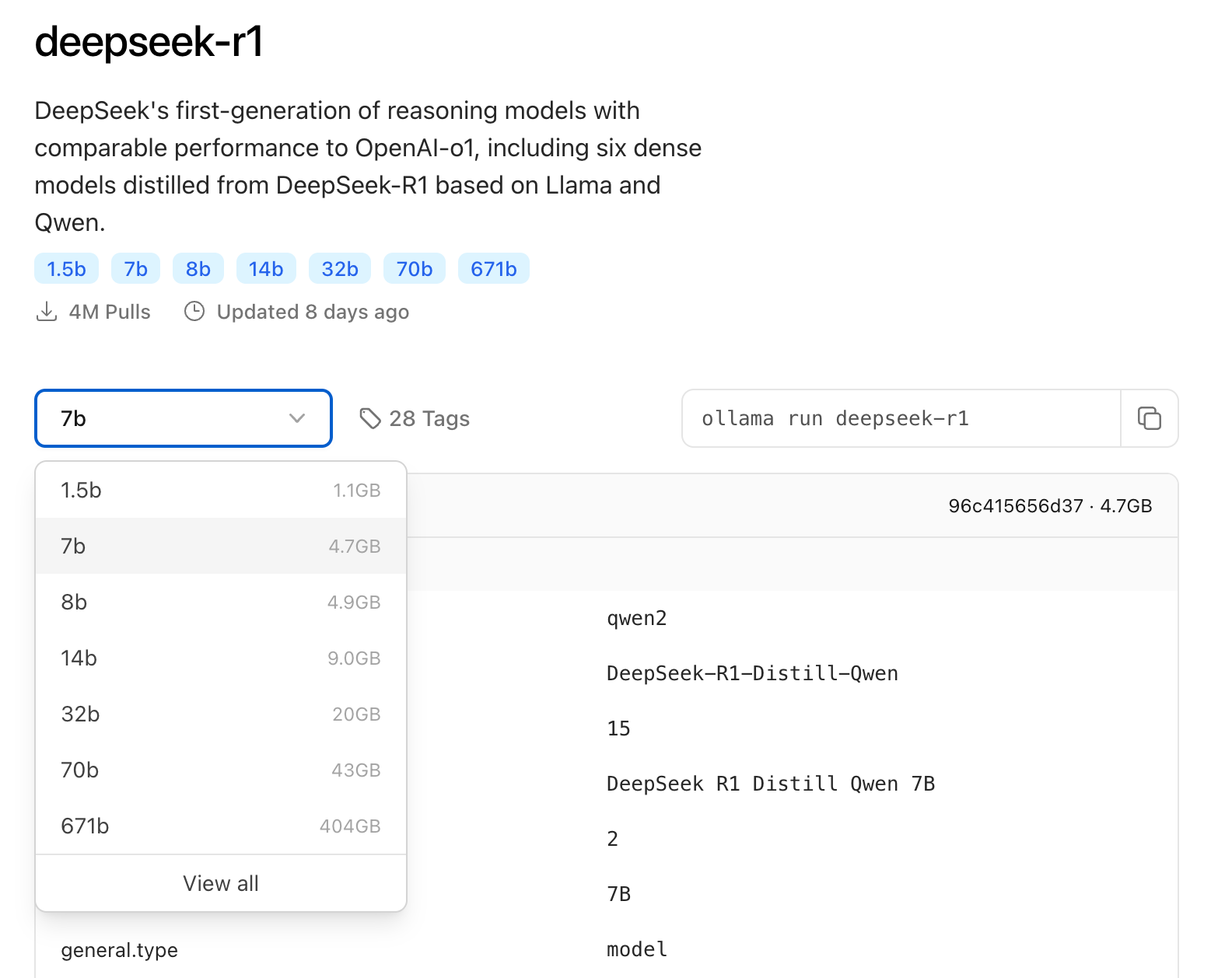

Select an appropriate DeepSeek model size based on your available hardware. A 7B model is recommended for initial installation.

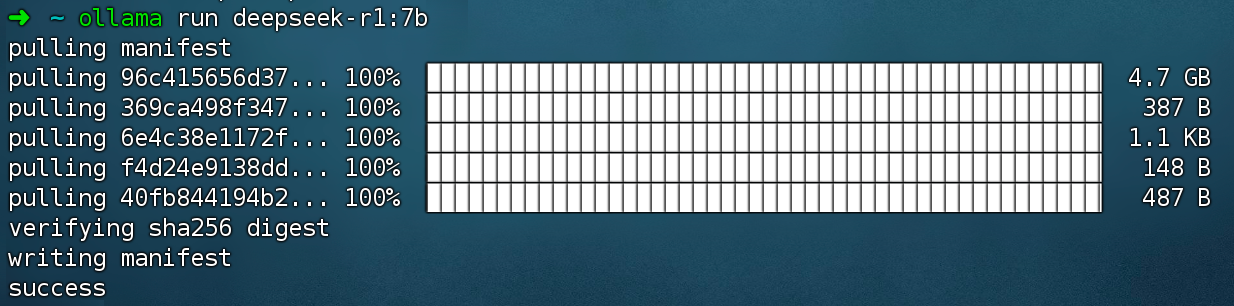

Run ollama run deepseek-r1:7b to download and start the DeepSeek R1 model.
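Once the download has finished, one way to confirm that the model is available is to ask the local Ollama service for its model list. This is a minimal sketch, assuming Ollama is listening on its default address (http://localhost:11434) and that the /api/tags endpoint returns a JSON object with a models array; the function names are illustrative.

```python
import json
import urllib.request
from typing import List


def parse_model_names(tags_json: str) -> List[str]:
    """Extract model names from the JSON body returned by GET /api/tags."""
    data = json.loads(tags_json)
    return [model["name"] for model in data.get("models", [])]


def list_local_models(base_url: str = "http://localhost:11434") -> List[str]:
    """Query a running Ollama service for the models it has stored locally."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=10) as resp:
        return parse_model_names(resp.read().decode("utf-8"))


if __name__ == "__main__":
    # Requires a running Ollama service; raises URLError otherwise.
    print("deepseek-r1:7b" in list_local_models())
```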

2. Install Dify Community Edition

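Clone the Dify GitHub repository (git clone https://github.com/langgenius/dify.git), change into the dify/docker directory, copy .env.example to .env, and start the stack with docker compose up -d.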

After running the command, you should see all containers running with proper port mappings. For detailed instructions, refer to Deploy with Docker Compose.

Dify Community Edition runs on port 80 by default. You can access your private Dify platform at: http://your_server_ip
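A quick reachability check can confirm that the web front end is answering before you open it in a browser. The sketch below is only an illustration: your_server_ip is the placeholder from the text above, and the helper names dify_url and is_reachable are hypothetical.

```python
import urllib.error
import urllib.request


def dify_url(host: str, port: int = 80) -> str:
    """Build the Dify front-end URL, omitting the port when it is the default 80."""
    return f"http://{host}" if port == 80 else f"http://{host}:{port}"


def is_reachable(url: str, timeout: float = 5.0) -> bool:
    """Return True if any HTTP response comes back from the URL."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server answered, even if with an error status.
        return True
    except (urllib.error.URLError, OSError):
        # Connection refused, DNS failure, timeout, and similar.
        return False


if __name__ == "__main__":
    # Replace your_server_ip with the address of the machine running Dify.
    print(is_reachable(dify_url("your_server_ip")))
```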

3. Integrate DeepSeek with Dify

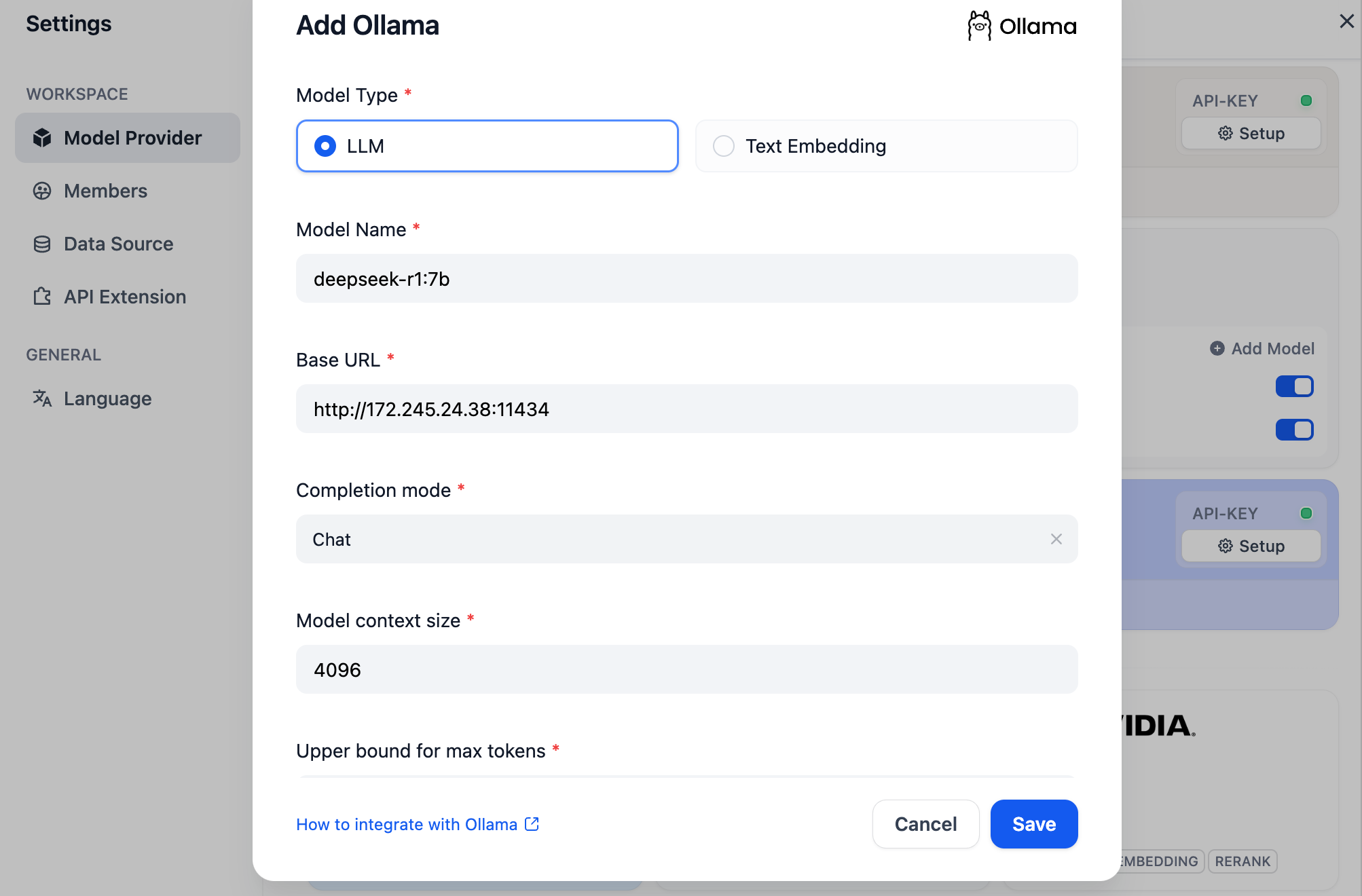

Go to Profile → Settings → Model Providers in the Dify platform. Select Ollama and click Add Model.

Note: The “DeepSeek” option in Model Providers refers to the online API service, whereas the Ollama option is used for a locally deployed DeepSeek model.

Configure the Model:

• Model Name: Enter the deployed model name, e.g., deepseek-r1:7b.

• Base URL: Set the Ollama client’s local service URL, typically http://your_server_ip:11434. If you encounter connection issues, please refer to the FAQ.

• Other settings: Keep default values. According to the DeepSeek model specifications, the max token length is 32,768.
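Before saving these settings, it can help to confirm that the Base URL and Model Name work together by sending one request directly to Ollama. The following is a minimal sketch, assuming Ollama's /api/chat endpoint with streaming disabled; the function names are illustrative and the base URL is whatever you plan to enter in Dify.

```python
import json
import urllib.request
from typing import Any, Dict


def build_chat_payload(model: str, prompt: str) -> Dict[str, Any]:
    """Build a non-streaming request body for Ollama's /api/chat endpoint."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,
    }


def chat_once(base_url: str, model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send a single prompt to Ollama and return the assistant's reply text."""
    body = json.dumps(build_chat_payload(model, prompt)).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url.rstrip('/')}/api/chat",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        reply = json.loads(resp.read().decode("utf-8"))
    return reply["message"]["content"]


if __name__ == "__main__":
    # Requires a running Ollama service with the model already downloaded.
    print(chat_once("http://localhost:11434", "deepseek-r1:7b", "Hello"))
```

If this call succeeds from the machine (or container) where Dify runs, the same Base URL and Model Name should work in the Model Providers form.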

Build AI Applications

DeepSeek AI Chatbot (Simple Application)

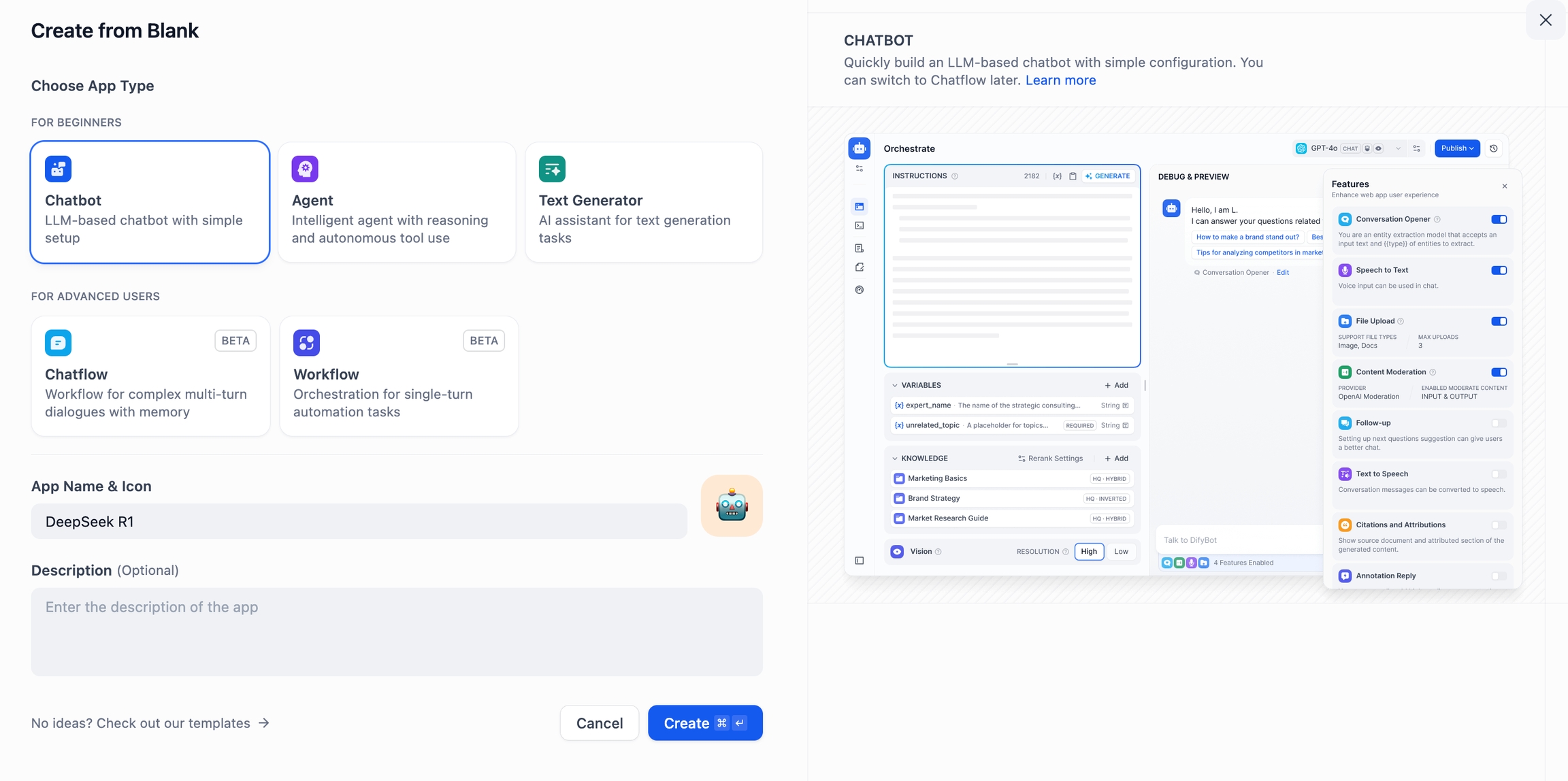

On the Dify homepage, click Create Blank App, select Chatbot, and give it a name.

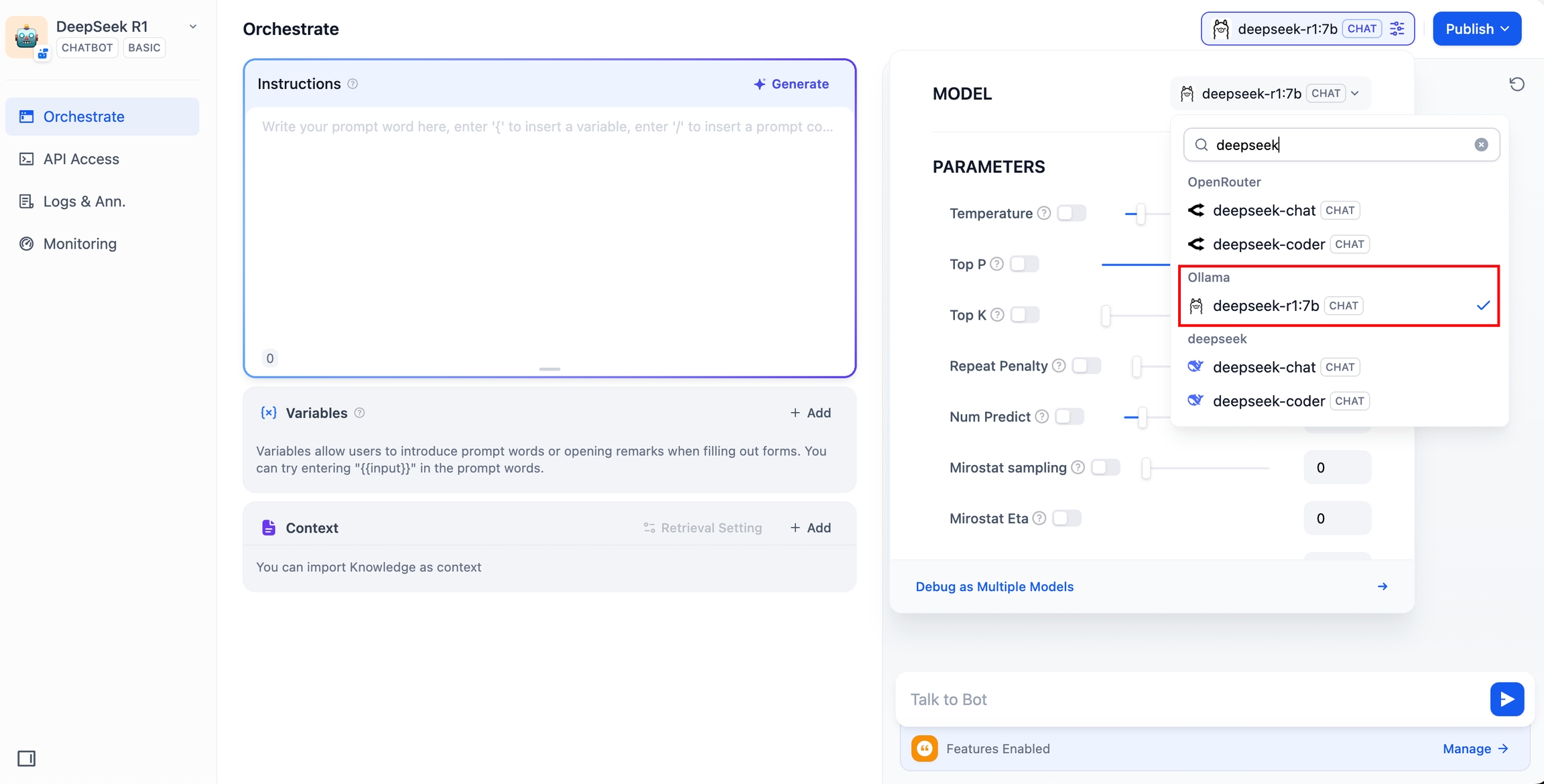

Select the deepseek-r1:7b model under Ollama in the Model Provider section.

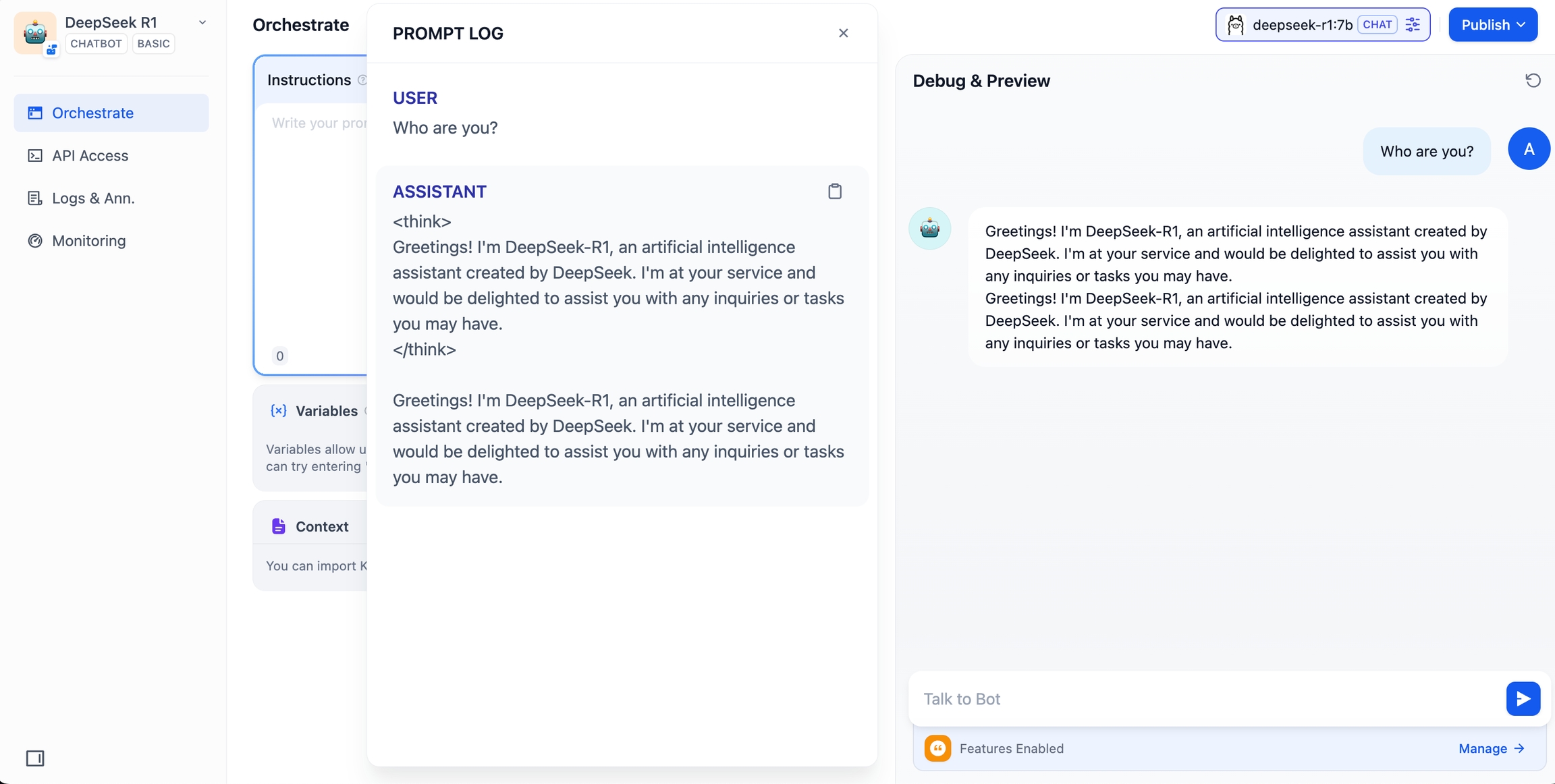

Enter a message in the chat preview to verify the model’s response. If it replies correctly, the chatbot is online.

Click the Publish button to obtain a shareable link or embed the chatbot into other websites.

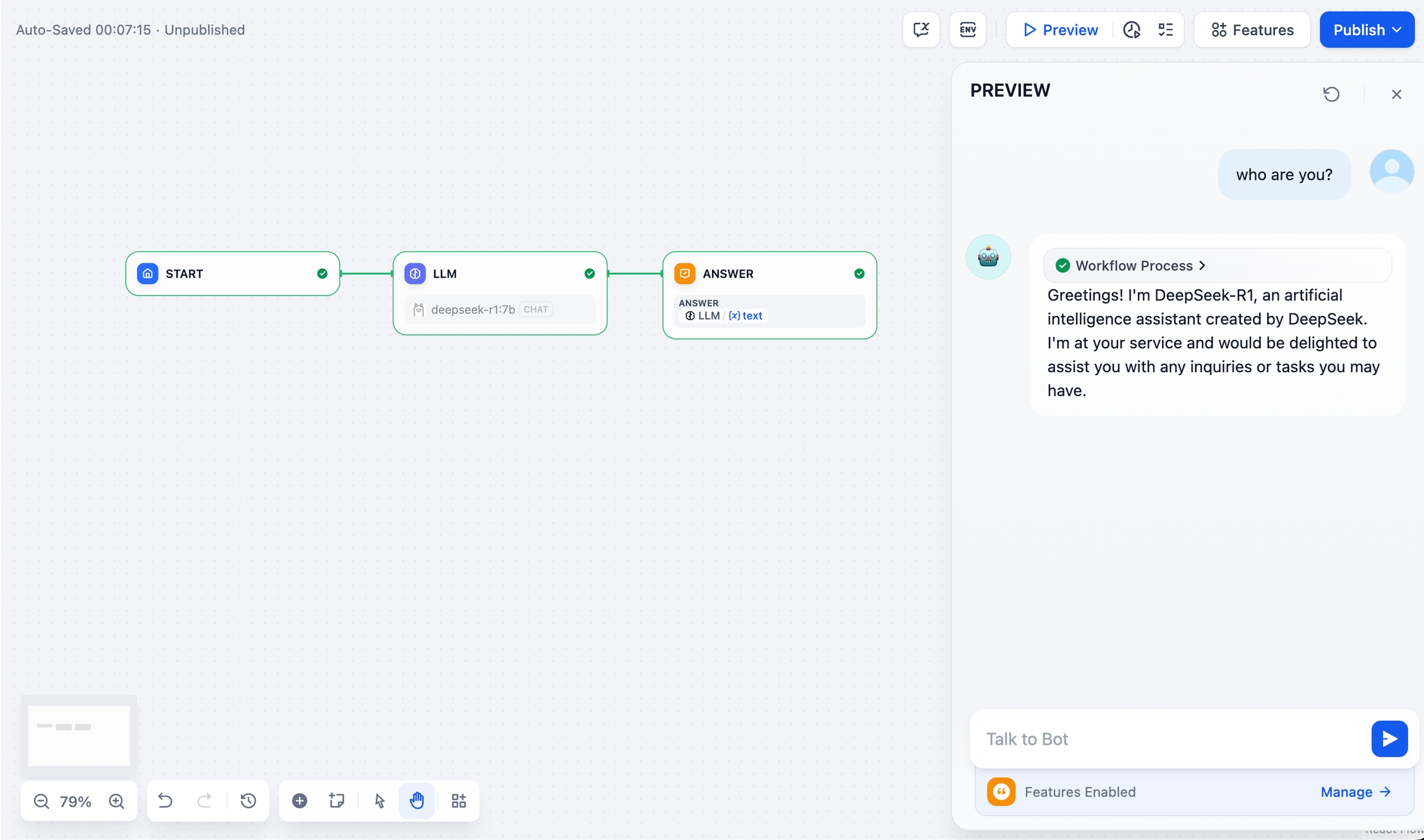

DeepSeek AI Chatflow / Workflow (Advanced Application)

Chatflow / Workflow applications enable the creation of more complex AI solutions, such as document recognition, image processing, and speech recognition. For more details, please check the Workflow Documentation.

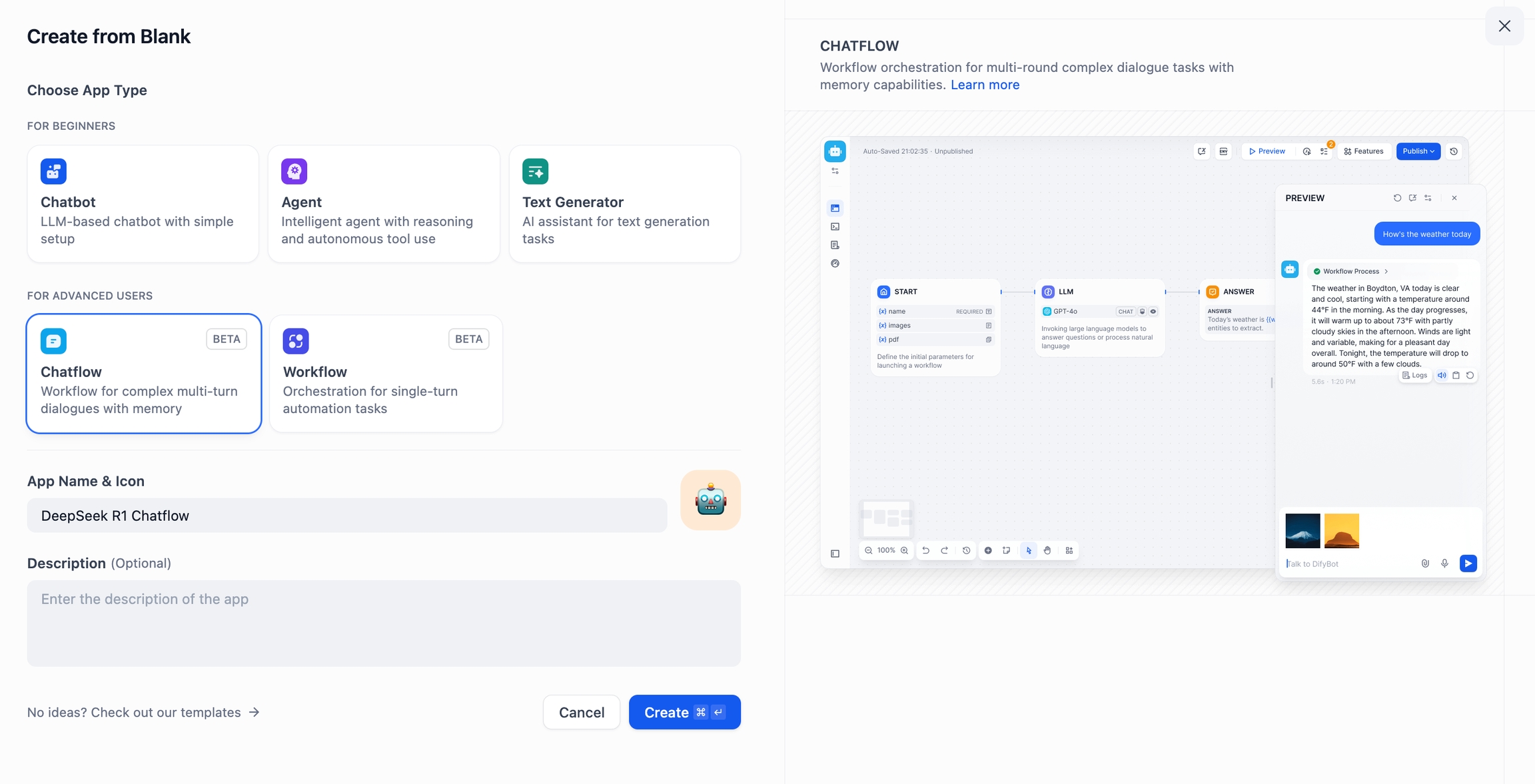

Click Create Blank App, then select Chatflow or Workflow, and name the application.

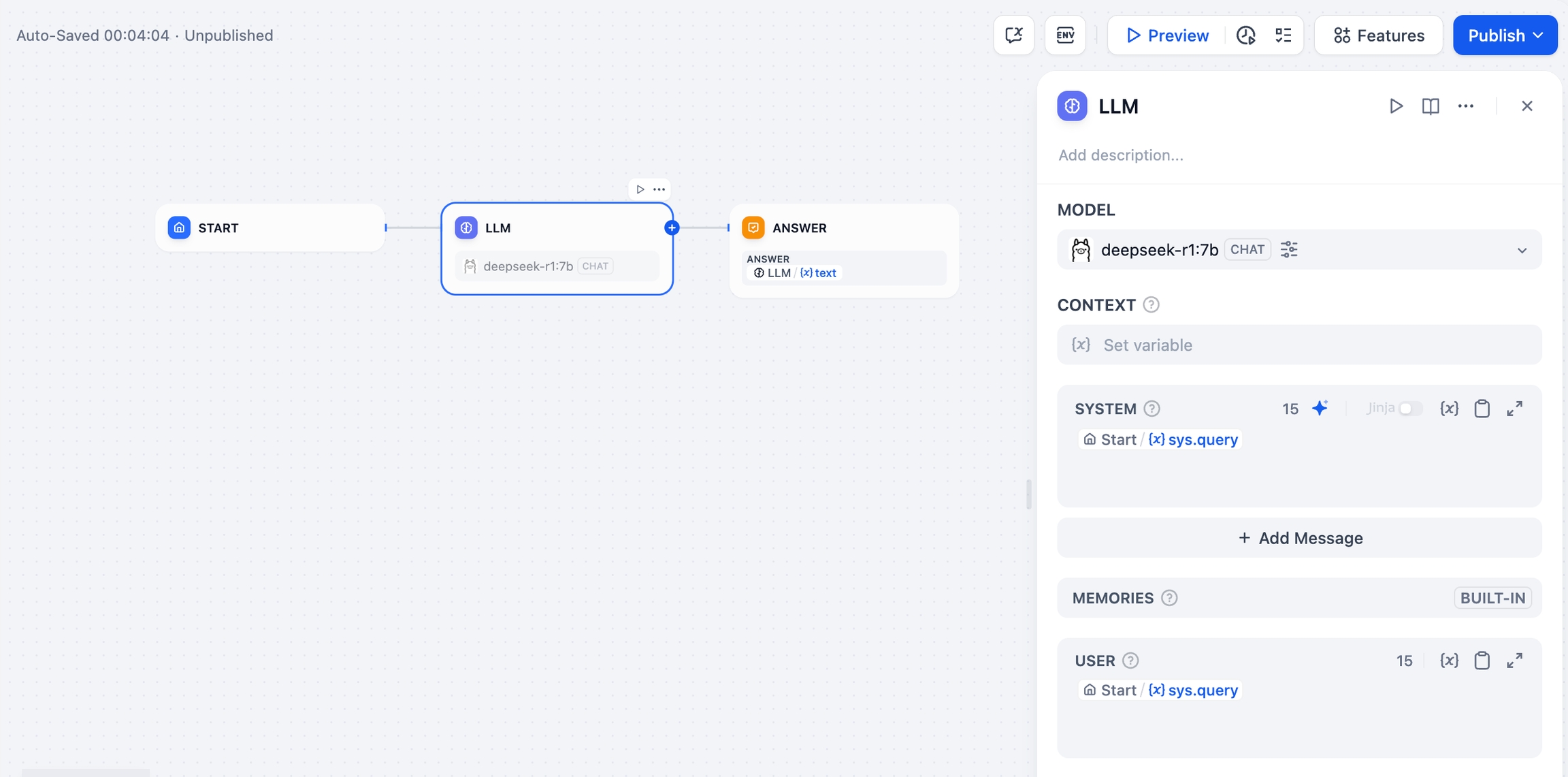

Add an LLM node, select the deepseek-r1:7b model under Ollama, and insert the {{#sys.query#}} variable into the system prompt to connect to the initial node. If you encounter any API issues, you can handle them via Load Balancing or the Error Handling node.

Add an End Node to complete the configuration. Test the workflow by entering a query. If the response is correct, the setup is complete.

FAQ

1. Connection Errors When Using Docker

If Dify runs inside Docker and cannot connect to the Ollama service, requests to the model fail with a connection error.

Cause:

Ollama is not accessible inside the Docker container because localhost refers to the container itself.

Solution:

Setting environment variables on Mac:

If Ollama is run as a macOS application, environment variables should be set using launchctl:

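For each environment variable, call launchctl setenv. For example, launchctl setenv OLLAMA_HOST "0.0.0.0" makes Ollama listen on all network interfaces.

Restart the Ollama application.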

If the above steps are ineffective, you can use the following method:

The issue lies within Docker itself: to access the Docker host from inside a container, you should connect to host.docker.internal. Replacing localhost with host.docker.internal in the service URL (for example, http://host.docker.internal:11434) therefore makes the connection work.
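The substitution can be expressed as a small helper. This is a sketch under stated assumptions: host.docker.internal is assumed to resolve inside the container (the default on Docker Desktop; on Linux hosts it may need an extra host mapping), and the function name docker_reachable_url is hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit

# Host names that point back at the container itself when used inside Docker.
LOOPBACK_HOSTS = {"localhost", "127.0.0.1", "::1"}


def docker_reachable_url(url: str, host_alias: str = "host.docker.internal") -> str:
    """Swap a loopback host in the URL for the Docker host alias, keeping the port and path."""
    parts = urlsplit(url)
    if parts.hostname not in LOOPBACK_HOSTS:
        return url  # Already points at a non-loopback address.
    # Note: any user:password@ prefix in the original URL is dropped here.
    netloc = host_alias if parts.port is None else f"{host_alias}:{parts.port}"
    return urlunsplit((parts.scheme, netloc, parts.path, parts.query, parts.fragment))


if __name__ == "__main__":
    print(docker_reachable_url("http://localhost:11434"))
```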

Setting environment variables on Linux:

If Ollama is run as a systemd service, environment variables should be set using systemctl:

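Edit the systemd service by calling systemctl edit ollama.service. This will open an editor.

For each environment variable, add an Environment line under the [Service] section, for example Environment="OLLAMA_HOST=0.0.0.0".

Save and exit.

Reload systemd and restart Ollama by running systemctl daemon-reload followed by systemctl restart ollama.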
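To make the expected contents of the override concrete, the sketch below renders the text you would paste into the editor. It assumes OLLAMA_HOST=0.0.0.0 as the variable to set; the helper render_systemd_override is illustrative and does not write any file or call systemctl.

```python
from typing import Mapping


def render_systemd_override(env: Mapping[str, str]) -> str:
    """Render a [Service] section with one Environment line per variable."""
    lines = ["[Service]"]
    for name, value in env.items():
        lines.append(f'Environment="{name}={value}"')
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Prints the text to paste into the editor opened by systemctl edit.
    print(render_systemd_override({"OLLAMA_HOST": "0.0.0.0"}), end="")
```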

Setting environment variables on Windows:

On Windows, Ollama inherits your user and system environment variables.

First, quit Ollama by clicking its icon in the taskbar.

Edit the system environment variables from the Control Panel.

Edit or create new variables for your user account, such as OLLAMA_HOST, OLLAMA_MODELS, etc.

Click OK/Apply to save.

Run ollama from a new terminal window.

2. How to Modify the Address and Port of Ollama Service?

Ollama binds 127.0.0.1 port 11434 by default. Change the bind address with the OLLAMA_HOST environment variable.
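The sketch below illustrates how an OLLAMA_HOST value maps to a bind address and port, falling back to the defaults named above. It is a simplified illustration, not Ollama's own parsing logic: it handles plain host, host:port, and values with a scheme prefix, and does not cover IPv6 literals. The function name is hypothetical.

```python
import os
from typing import Mapping, Optional, Tuple

DEFAULT_HOST = "127.0.0.1"
DEFAULT_PORT = 11434


def ollama_bind_address(env: Optional[Mapping[str, str]] = None) -> Tuple[str, int]:
    """Derive (host, port) from OLLAMA_HOST, using the defaults when it is unset."""
    env = os.environ if env is None else env
    raw = env.get("OLLAMA_HOST", "").strip()
    if "://" in raw:
        raw = raw.split("://", 1)[1]  # Drop an optional scheme such as http://
    raw = raw.rstrip("/")
    if not raw:
        return DEFAULT_HOST, DEFAULT_PORT
    host, sep, port = raw.rpartition(":")
    if sep and port.isdigit():
        return (host or DEFAULT_HOST), int(port)
    return raw, DEFAULT_PORT


if __name__ == "__main__":
    print(ollama_bind_address({"OLLAMA_HOST": "0.0.0.0:11434"}))
```

Note that 0.0.0.0 is a bind address meaning "all interfaces"; in Dify's Base URL field you would still enter the server's actual IP address or host name.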
